This Week in AI: From Unicorns to K-Pop

Secret ingredient

In a recent post on Platformer, tech journalist Casey Newton laid the groundwork for how he’ll cover AI. His first bullet point?

Be rigorous with definitions.

AI isn’t one thing, but a suite of technologies that are rapidly affecting our lives. Precision is important.

- “The lesson is to be specific when discussing various different technologies. If I’m writing about a large language model, I’ll make sure I say that — and will try not to conflate it with other forms of machine learning.”

Speaking of LLMs, Big Think reminds us that tech like ChatGPT is “a very sophisticated form of auto-complete.” When you have the entire internet as your training data, what seems miraculous is really just speed (which is still a bit miraculous).

Previously, we looked to symbolic AI as a window into the future. But there were problems.

- “Symbolic AI had some successes, but failed spectacularly on a huge range of tasks that seem trivial for humans. Even a task like recognizing a human face was beyond symbolic AI. The reason for this is that recognizing faces is a task that involves perception. Perception is the problem of understanding what we are seeing, hearing, and sensing. Those of us fortunate enough to have no sensory impairments largely take perception for granted — we don’t really think about it, and we certainly don’t associate it with intelligence. But symbolic AI was just the wrong way of trying to solve problems that require perception.”

Enter neural networks. Think of them as modeling the brain instead of the mind. While these networks have been studied since the forties, and today’s models have been around since the eighties, what we’ve gained is…the internet. Lots and lots and lots of data.

Oh, and power. Lots and lots and lots of computational power.

- “GPT-3 had 175 billion parameters in total; GPT-4 reportedly has 1 trillion. By comparison, a human brain has something like 100 billion neurons in total, connected via as many as 1,000 trillion synaptic connections. Vast though current LLMs are, they are still some way from the scale of the human brain.”

Considering humans have been around in our current form for roughly a quarter-million years, and LLMs for about 70 (counting the advances that led to their development), there’s still a lot of runway left.

And since LLMs are producing unseen capabilities thanks to the enormous amounts of data they’re being trained on, a “true AI” is not infeasible. We have to remember that LLMs are only one ingredient, and we have no idea yet what else is in the recipe.

Uncommon sense

A driving fear/fascination of AI’s potential is that it teaches itself. A recent paper by Microsoft researchers (cautiously) argues that GPT-4 might just be doing that.

- The paper claims that GPT-4 is hinting at common sense and reason.

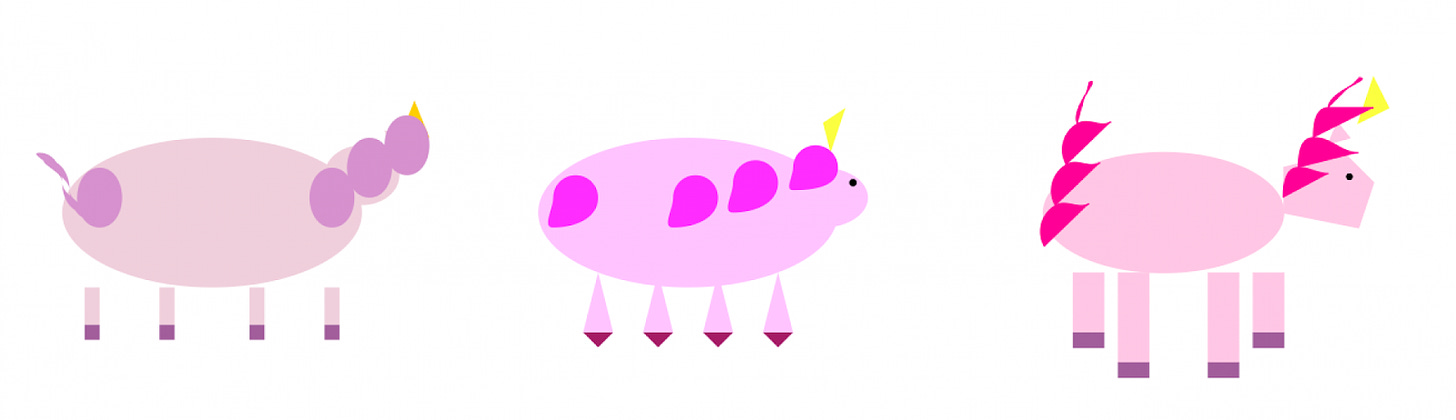

- The research team wanted to differentiate between memorization and actual learning. Over the course of one month, GPT-4 evolved an understanding of how to draw a unicorn.

- “I started off being very skeptical — and that evolved into a sense of frustration, annoyance, maybe even fear,” says Microsoft’s lead researcher, Peter Lee. “You think: Where the heck is this coming from?”

The 155-page paper might not hold up to scientific scrutiny, say the researchers. Still, an LLM showing an ability to “think” abstractly and “reason” is of note.

Universal language

While much of the attention on generative AI in music has focused on its ability to mimic famous musicians (and potentially monetize the results), K-Pop artist MIDNATT has a different goal: to use AI to translate his music into other languages.

- The artist’s first single, “Masquerade,” was released simultaneously in English, Korean, Spanish, Japanese, Chinese, and Vietnamese.

- Lee Hyun, the voice behind MIDNATT, is a veteran singer on the K-Pop scene. He’s making a rare career pivot by using technology to create a new identity.

- His ultimate goal? Accessibility, as some fans don’t like lyrics they can’t understand.

The technology behind the song is Supertone, which was acquired for $36 million earlier this year by K-Pop corporation HYBE.

- “When I would listen to music in other languages, I couldn’t immerse into the music as well as in my native language, and we were talking about how we could overcome those language barriers,” says Hyun. “The project came to be by talking about these language barriers.”

- When undertaking the project, Hyun believed Vietnamese and Spanish would be the hardest translations. Turns out it was English.

Keep searching

Growing up, I learned how to search for information using the Dewey Decimal system through the technology of card catalogs. Finding what we need today is dramatically different.

One feature pointing the way forward is conversational AI. Instead of the Q&A model that Google has refined and dominated, information in the future might be more like talking to a friend, or perhaps striking up a conversation with the librarian.

Mustafa Suleyman, cofounder of DeepMind, thinks that “search” as we know it will disappear within a decade.

- “If I were Google, I would be very worried because the old search engine will not exist in ten years.”

Since selling DeepMind to Google in 2014, Suleyman has remained in AI. In 2019, he became a VP at Google, a move clouded by allegations that he had bullied staff (for which he has since apologized).

On his way out of the company, he tried to warn execs that search would have to dramatically change. Instead of “an AI,” he foresees billions of AIs that will suit businesses, industries, and even individuals. And that means how we seek and understand information will shift, and quickly—especially if Google wishes to continue to monetize the search process.

Warnings ahead. As Suleyman tells the No Priors podcast about the future of information acquisition:

- “When we need to read, the content is usually presented in an awkward format because if you stay on the page for 11 seconds, not 5 seconds, then Google thinks this is high-quality, engaging content. So content creators are incentivized to keep you on the page, which is bad for us because we, as humans, need quality, concise, fluent, natural language answers to our questions.”

- “More importantly, we want to be able to update our answers without having to think about how to change our query. We’ve learned “Google language,” a strange vocabulary we’ve co-developed with Google for 20 years. Now, this must stop. This era is over, we can now communicate with computers in fluent, natural language, which is the new search interface.”

- “We believe that in the next few years, everyone will have their own personal AI. These AIs will include business AI, government AI, non-profit AI, political AI, influencer AI, and brand AI. Each AI will have its own goals, aligned with the master’s goals. We believe that, as individuals, we all want our AI to align with our interests. This is what it means to have a personal artificial intelligence, let’s call it Pi, which will be your companion. We started out with an empathetic and supportive style and tried to ask ourselves what makes a good conversation.”

Ill communications

Perhaps the biggest fear around AI is the loss of jobs. And it turns out British telecom giant BT is planning to cut 55,000 of them by 2030.

- The jobs will be replaced by AI tools, predominantly in customer service and network management roles. Additionally, fiber engineers and maintenance workers could see a massive reduction.

- The cuts amount to roughly 40% of the corporation’s 130,000 staff (a figure that includes 30,000 contractors).

- The announcement came a day after another British telecom giant, Vodafone, said it will cut 11,000 jobs over the next three years, though it’s unclear how many of those will be replaced by AI.

Tool(s) of the Week

As ChatGPT, one of the fastest-growing applications of all time, continues to shape the tech landscape, it is also causing many tech companies to rethink their business models. Until recently, ChatGPT drew primarily on the data it was trained on. The game changer? Plugins.